regularization machine learning example

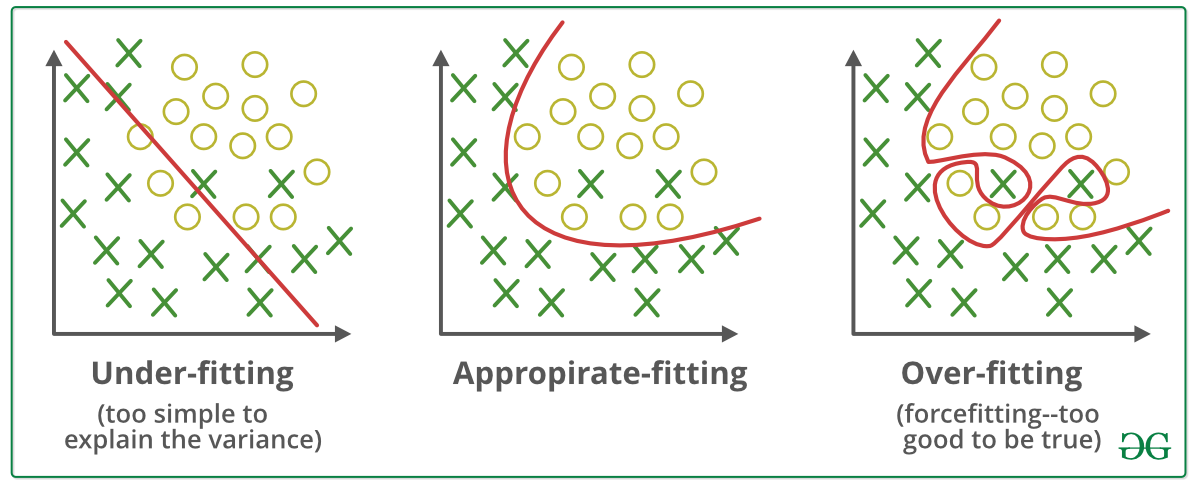

Based on the approach used to overcome overfitting, we can classify regularization techniques into three categories. Regularization is a technique that prevents a model from overfitting by adding extra information to it.

Regularization In Machine Learning Programmathically

Regularization helps the model generalize what it has learned from training examples to new, unseen data.

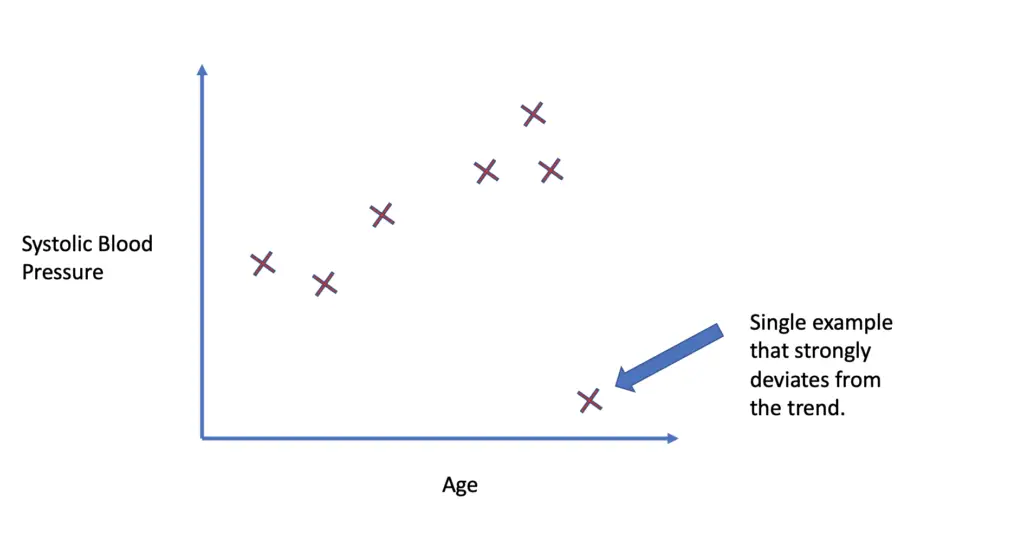

To avoid this, we use regularization in machine learning so that a model fitted on the training set also performs well on our test set. Part 2 will explain what regularization is and give some proofs related to it. By noise we mean the data points that don't really represent the underlying pattern in the data.

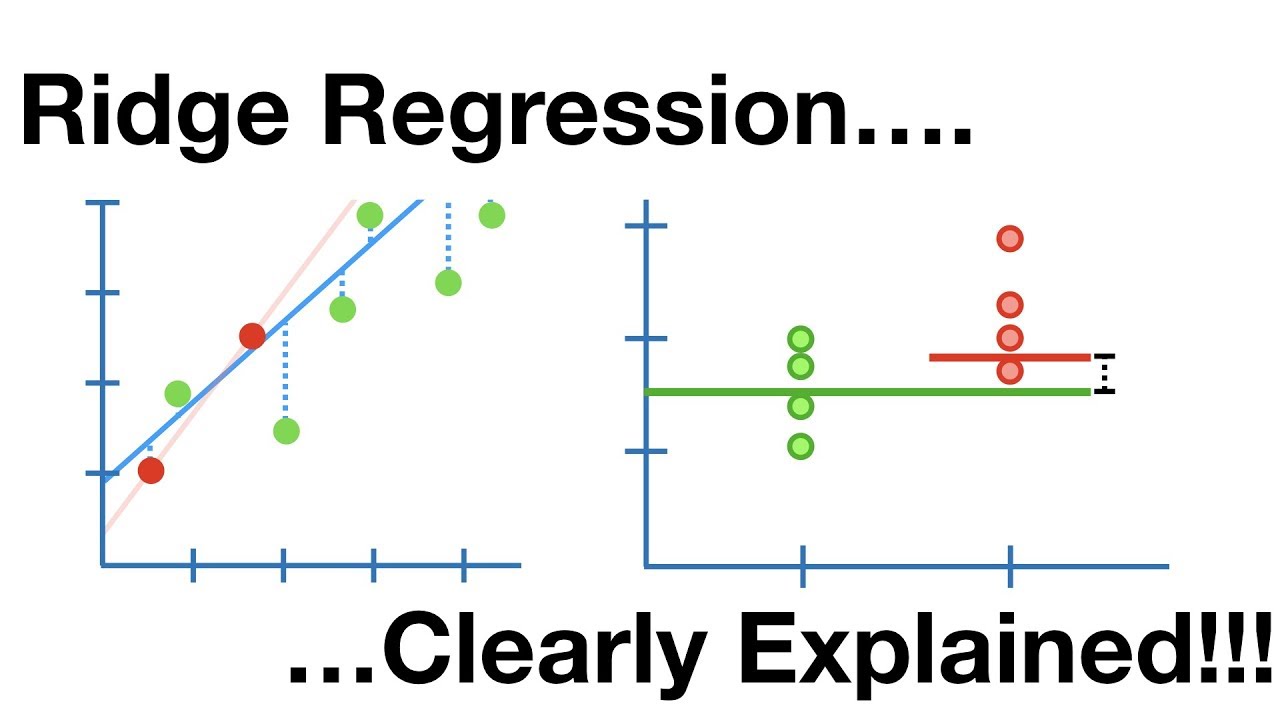

It is a type of regression. The simpler model is often the one that generalizes best. The penalty controls the model complexity: larger penalties yield simpler models.
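To make the link between penalty size and model simplicity concrete, here is a minimal sketch that assumes a one-feature ridge (L2) fit with no intercept. The function name `ridge_slope` and the toy data are hypothetical and chosen only for illustration.

```python
def ridge_slope(xs, ys, lam):
    """Slope w minimizing sum((y - w*x)**2) + lam * w**2 (no intercept)."""
    sxy = sum(x * y for x, y in zip(xs, ys))
    sxx = sum(x * x for x in xs)
    return sxy / (sxx + lam)


# Hypothetical toy data, roughly y = 2x with a little noise.
xs = [1.0, 2.0, 3.0, 4.0]
ys = [2.1, 3.9, 6.2, 7.8]

penalties = [0.0, 1.0, 10.0, 100.0]
slopes = [ridge_slope(xs, ys, lam) for lam in penalties]

for lam, w in zip(penalties, slopes):
    print(f"lambda={lam:6.1f}  slope={w:.4f}")
```

As the penalty grows, the fitted slope moves toward zero, which is one simple sense in which the model becomes "simpler".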

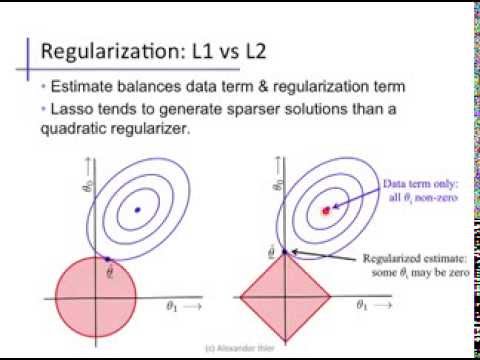

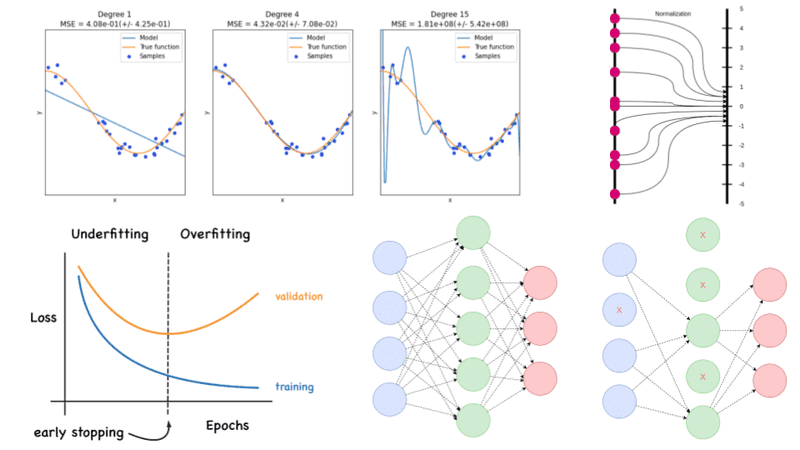

You can also reduce the model capacity by driving various parameters to zero. Poor performance can occur due to either overfitting or underfitting the data. Overfitting is a phenomenon where the model fits the training data, including its noise, so closely that it fails to generalize.

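We do this in the context of a simple 1-dim logistic regression model $P(y = 1 \mid x, w) = g(w_0 + w_1 x)$, where $g(z) = (1 + \exp\{-z\})^{-1}$. The red curve is before regularization and the blue curve is after regularization. The commonly used regularization techniques are L1 regularization and L2 regularization.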
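The effect of a penalty on the 1-dim logistic regression model can be sketched as follows. This is an illustrative example, not the original course code: it assumes plain gradient descent, an L2 penalty of `(lam / 2) * w1**2` applied only to the slope, and a small made-up dataset.

```python
import math


def sigmoid(z):
    """Logistic function g(z) = 1 / (1 + exp(-z))."""
    return 1.0 / (1.0 + math.exp(-z))


def fit_logistic(xs, ys, lam, lr=0.1, steps=5000):
    """Gradient descent on the mean negative log-likelihood plus (lam / 2) * w1**2.

    Only the slope w1 is penalized; the bias w0 is left free.
    """
    w0, w1 = 0.0, 0.0
    n = len(xs)
    for _ in range(steps):
        errors = [sigmoid(w0 + w1 * x) - y for x, y in zip(xs, ys)]
        grad_w0 = sum(errors) / n
        grad_w1 = sum(e * x for e, x in zip(errors, xs)) / n + lam * w1
        w0 -= lr * grad_w0
        w1 -= lr * grad_w1
    return w0, w1


# Hypothetical, non-separable toy data.
xs = [-2.0, -1.0, -0.5, 0.5, 1.0, 2.0]
ys = [0, 0, 1, 0, 1, 1]

_, w1_plain = fit_logistic(xs, ys, lam=0.0)
_, w1_reg = fit_logistic(xs, ys, lam=1.0)
print(f"slope without penalty: {w1_plain:.3f}")
print(f"slope with penalty:    {w1_reg:.3f}")
```

The penalized slope is smaller, so the fitted probability curve is flatter and reacts less sharply to individual training points.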

Part 1 deals with the theory of why regularization came into the picture and why we need it. Regularization techniques help reduce the chance of overfitting and help us get an optimal model. Regularization is an application of Occam's razor.

Each regularization method is marked as strong, medium, or weak based on how effective the approach is in addressing the issue of overfitting. In machine learning, regularization is a technique used to avoid overfitting. Regularization is a strategy that prevents overfitting by providing additional information to the machine learning algorithm.

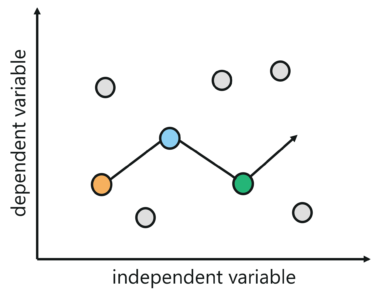

The general form of a regularization problem is a loss term plus a penalty term weighted by a regularization rate. Regularization reduces the excess weight placed on specific features and spreads the weights more evenly. How well a model fits the training data affects how well it performs on unseen data, but a closer fit is not always better.
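One common way to write the general form mentioned above is sketched below. The symbols $L$ (the unregularized loss) and $R$ (the penalty) are notation assumed here for illustration; $\theta$ denotes the weights being tuned and $\lambda$ the regularization rate.

```latex
\min_{\theta} \; J(\theta) = L(\theta) + \lambda \, R(\theta),
\qquad
R(\theta) = \sum_{j} |\theta_j| \ \text{(L1)}
\quad \text{or} \quad
R(\theta) = \sum_{j} \theta_j^{2} \ \text{(L2)}
```

Setting $\lambda = 0$ recovers the unregularized problem, while larger values of $\lambda$ put more emphasis on keeping the weights small.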

A simple regularization example. It means the model is not able to predict the output when given unseen data. Types of Regularization.

But here the coefficient values are reduced to zero. While training a machine learning model, the model can easily be overfitted or underfitted. Regularization is a technique used to reduce errors by fitting the function appropriately on the given training set and avoiding overfitting.
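The remark about coefficients being reduced to zero reads like a description of L1 (lasso) regularization; that reading is an assumption. Under it, the following sketch contrasts a one-feature lasso fit, which can set the slope exactly to zero, with a ridge fit, which only shrinks it. The function names and data are hypothetical.

```python
def soft_threshold(z, t):
    """Shrink z toward zero by t, returning exactly 0.0 when |z| <= t."""
    if z > t:
        return z - t
    if z < -t:
        return z + t
    return 0.0


def lasso_slope(xs, ys, lam):
    """Slope minimizing 0.5 * sum((y - w*x)**2) + lam * abs(w) (no intercept)."""
    sxy = sum(x * y for x, y in zip(xs, ys))
    sxx = sum(x * x for x in xs)
    return soft_threshold(sxy, lam) / sxx


def ridge_slope_half(xs, ys, lam):
    """Slope minimizing 0.5 * sum((y - w*x)**2) + 0.5 * lam * w**2 (no intercept)."""
    sxy = sum(x * y for x, y in zip(xs, ys))
    sxx = sum(x * x for x in xs)
    return sxy / (sxx + lam)


# Hypothetical data where the feature carries only a weak signal.
xs = [1.0, 2.0, 3.0]
ys = [0.2, -0.1, 0.3]

lasso_w = lasso_slope(xs, ys, lam=1.0)
ridge_w = ridge_slope_half(xs, ys, lam=1.0)
print("lasso slope:", lasso_w)
print("ridge slope:", ridge_w)
```

With the same penalty strength, the lasso slope is exactly zero while the ridge slope stays small but nonzero.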

I have covered the entire concept in two parts. One of the major aspects of training your machine learning model is avoiding overfitting. This occurs when a model learns the training data too well and therefore performs poorly on new data.

It deals with overfitting of the data, which can lead to decreased model performance. The θs are the factors/weights being tuned. Sometimes the machine learning model performs well with the training data but does not perform well with the test data.

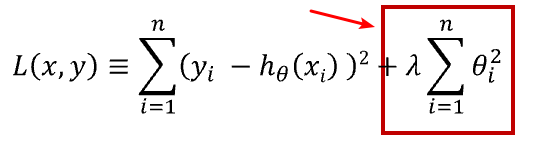

Regularization helps to solve the problem of overfitting in machine learning. λ is the regularization rate, and it controls the amount of regularization applied to the model.

We can regularize machine learning methods through the cost function using L1 regularization or L2 regularization. In this article titled The Best Guide to. In layman's terms, the regularization approach reduces the magnitude of the coefficients of the independent variables while maintaining the same number of variables.

Both overfitting and underfitting are problems that ultimately cause poor predictions on new data. This video on Regularization in Machine Learning will help us understand the techniques used to reduce the errors while training the model. This is an important theme in machine learning.

Regularization is one of the most basic and important concepts in the world of machine learning. Regularization is one of the techniques used to control overfitting in high-flexibility models. Overfitting happens because your model is trying too hard to capture the noise in your training dataset.

Regularization is one of the most important concepts in machine learning. 6.867 Machine learning: Regularization example. We'll commence here by expanding a bit on the relation between the effective number of parameter choices and regularization discussed in the lectures.

Regularization is used with many different machine learning algorithms, including deep neural networks. The model will have low accuracy on new data if it is overfitting. It is one of the key concepts in machine learning, as it helps choose a simple model rather than a complex one.

L1 regularization adds an absolute-value penalty term to the cost function, while L2 regularization adds a squared penalty term to the cost function. Let us understand how it works. Ridge Regression, also called Tikhonov Regularization, is a regularised version of Linear Regression, a technique for analyzing multiple regression data.
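The two penalty terms can be sketched in a few lines. This example assumes a mean-squared-error cost for a linear model; the helper names (`l1_penalty`, `l2_penalty`, `regularized_cost`) and the toy data are hypothetical.

```python
def predict(weights, row):
    """Linear prediction: dot product of weights and features."""
    return sum(w * x for w, x in zip(weights, row))


def mean_squared_error(weights, rows, targets):
    """Average squared difference between predictions and targets."""
    return sum((predict(weights, r) - t) ** 2 for r, t in zip(rows, targets)) / len(rows)


def l1_penalty(weights):
    """Sum of absolute weights (lasso-style penalty)."""
    return sum(abs(w) for w in weights)


def l2_penalty(weights):
    """Sum of squared weights (ridge-style penalty)."""
    return sum(w * w for w in weights)


def regularized_cost(weights, rows, targets, lam, penalty):
    """Data-fit cost plus lam times the chosen penalty."""
    return mean_squared_error(weights, rows, targets) + lam * penalty(weights)


rows = [[1.0, 0.0], [0.0, 1.0]]
targets = [0.5, -2.0]
weights = [0.5, -2.0]  # fits the toy data exactly, so only the penalty remains

l1_cost = regularized_cost(weights, rows, targets, 0.1, l1_penalty)
l2_cost = regularized_cost(weights, rows, targets, 0.1, l2_penalty)
print("cost with L1 penalty:", l1_cost)
print("cost with L2 penalty:", l2_cost)
```

Because the squared penalty grows faster for large weights, L2 punishes the weight of -2.0 far more than the weight of 0.5, while L1 charges each weight in proportion to its size.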

Regularization is a method to balance overfitting and underfitting of a model during training. This article focuses on L1 and L2 regularization. Regularization in Machine Learning.

Regularization helps to reduce overfitting by adding constraints to the model-building process. Regularization is a technique to reduce overfitting in machine learning. This keeps the model from overfitting the data and follows Occam's razor.

As data scientists, it is of utmost importance that we learn. Overfitting occurs when a machine learning model is tuned to learn the noise in the data rather than the patterns or trends in the data. By Suf Dec 12 2021 Experience Machine Learning Tips.

It is a type of regression that constrains or shrinks the coefficient estimates towards zero. As seen above, we want our model to perform well both on the training data and on new, unseen data, meaning the model must be able to generalize. Regularization is a method of rescuing a regression model from overfitting by shrinking the coefficients of features towards zero.

Through the process of regularization, we reduce the complexity of the regression function without. The regularization rate λ is selected using cross-validation. In machine learning, regularization problems impose an additional penalty on the cost function.
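Selecting the penalty strength by cross-validation can be sketched as follows. The example assumes a one-feature ridge fit with no intercept, three interleaved folds, and a made-up dataset and candidate grid; all names are hypothetical.

```python
def ridge_slope(xs, ys, lam):
    """Slope w minimizing sum((y - w*x)**2) + lam * w**2 (no intercept)."""
    sxy = sum(x * y for x, y in zip(xs, ys))
    sxx = sum(x * x for x in xs)
    return sxy / (sxx + lam)


def cv_error(xs, ys, lam, k=3):
    """Mean squared validation error of the ridge fit over k interleaved folds."""
    n = len(xs)
    total = 0.0
    for fold in range(k):
        train = [i for i in range(n) if i % k != fold]
        held_out = [i for i in range(n) if i % k == fold]
        w = ridge_slope([xs[i] for i in train], [ys[i] for i in train], lam)
        total += sum((ys[i] - w * xs[i]) ** 2 for i in held_out)
    return total / n


def select_lambda(xs, ys, candidates, k=3):
    """Return the candidate penalty with the lowest cross-validation error."""
    return min(candidates, key=lambda lam: cv_error(xs, ys, lam, k))


# Hypothetical noisy data and candidate grid.
xs = [0.5, 1.0, 1.5, 2.0, 2.5, 3.0]
ys = [1.4, 1.7, 3.5, 3.6, 5.6, 5.7]
candidates = [0.0, 0.1, 1.0, 10.0]

best_lam = select_lambda(xs, ys, candidates)
print("selected lambda:", best_lam)
```

Each candidate is scored only on data held out from fitting, so the chosen value reflects performance on unseen points rather than on the training set.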
